Deep learning model boosts additive manufacturing X-ray analysis

Researchers have created a deep learning model called AM-SegNet to identify critical features within molten pools of material during the additive manufacturing process. It draws on a database of over 10 000 X-ray images of additive manufacturing from ESRF beamline ID19 and other synchrotron facilities. AM-SegNet lets scientists process large radiographic datasets automatically, giving deeper insight into the complex interactions that occur during laser-based 3D printing of metals.

Additive manufacturing (AM), or 3D printing, has the potential to revolutionise traditional manufacturing methods. However, reliability issues with the new technology have prevented its use for safety-critical components. Imaging technologies are crucial to understanding why these problems occur: they help to identify defects at various stages of the process and to propose new printing strategies that improve the quality of components. Synchrotron X-ray imaging provides important insights into the complex physical phenomena that take place during AM. However, this method produces large volumes of images, making manual data processing overly time-consuming.

Existing machine-learning approaches are limited in their ability to account for the nature of melt pool (molten material) dynamics, which play an important role in determining the microstructural properties of a material. Furthermore, existing approaches rely upon data from a single synchrotron facility, which reduces their general applicability to data acquired elsewhere. Researchers from University College London, UK, sought to build on prior research to create a more accurate and efficient model, trained with over 10 000 images from three different synchrotron facilities, including images obtained at beamline ID19 at the ESRF.

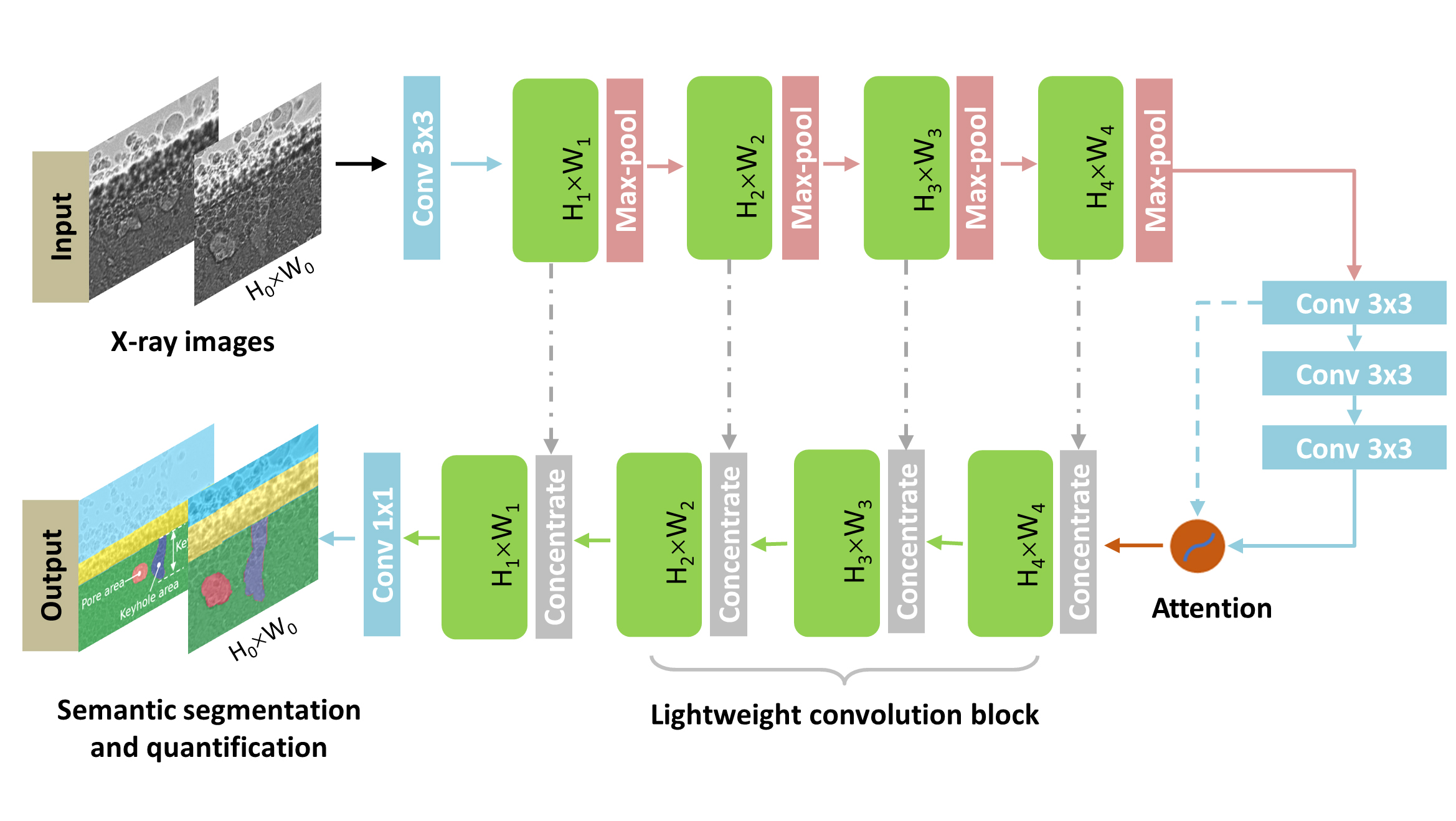

The resulting model, called AM-SegNet, has been designed for the automatic segmentation and quantification of high-resolution X-ray images. Semantic segmentation works by assigning a specific label to each pixel within the image, allowing features to be quantified and correlated across a large dataset with high confidence. Crucially, thanks to the lightweight convolution block proposed in this study (see Figure 1), this high level of accuracy has been achieved without compromising on speed. Among the state-of-the-art models compared, AM-SegNet achieved both the highest segmentation accuracy (~96%) and the fastest processing speed (<4 ms per frame). A well-trained AM-SegNet was used to perform segmentation analysis on time-series X-ray images in AM experiments (see Video 1), and its application has been extended to other advanced manufacturing processes such as high-pressure die-casting, with reasonable success (see Video 2).
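
To make per-pixel labelling and feature quantification concrete, here is a minimal Python sketch. It is not taken from the AM-SegNet code: the class names, the pixel size and the helper functions `quantify_labels` and `pixel_accuracy` are all hypothetical, and simple pixel accuracy is only one common metric (the article does not say which metric underlies the ~96% figure).

```python
import numpy as np

# Hypothetical class ids; the article does not list AM-SegNet's label set.
CLASS_NAMES = {0: "background", 1: "melt_pool", 2: "keyhole", 3: "pore"}


def quantify_labels(label_map, pixel_size_um):
    """Return the area (in square micrometres) covered by each class."""
    # Count how many pixels carry each integer label.
    counts = np.bincount(label_map.ravel(), minlength=len(CLASS_NAMES))
    pixel_area = pixel_size_um ** 2
    return {name: float(counts[idx]) * pixel_area
            for idx, name in CLASS_NAMES.items()}


def pixel_accuracy(predicted, ground_truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    return float(np.mean(predicted == ground_truth))


# Toy 4x4 frame: every pixel holds exactly one class id.
ground_truth = np.array([
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 2, 0],
    [0, 0, 3, 0],
])
predicted = ground_truth.copy()
predicted[3, 2] = 0  # simulate one mislabelled pixel (a missed pore)

areas = quantify_labels(predicted, pixel_size_um=2.0)  # assumed pixel size
accuracy = pixel_accuracy(predicted, ground_truth)
```

Because each frame reduces to a handful of numbers (areas here, but depths or widths could be derived in the same way), the same function can be applied to every frame of a time series to track how a feature evolves. As a point of scale, a processing time below 4 ms per frame corresponds to more than 250 frames per second.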

Fig. 1: Pipeline of X-ray image processing, including flat field correction, background subtraction, image cropping and pixel labelling.
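
The preprocessing steps named in this caption can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' pipeline: the function names are hypothetical, flat field correction is taken to be the standard (raw - dark) / (flat - dark) normalisation, and the background is assumed to be a static, already corrected reference frame. Pixel labelling is omitted, since it produces the ground truth rather than transforming the image.

```python
import numpy as np


def flat_field_correct(raw, flat, dark, eps=1e-6):
    """Normalise a radiograph by the beam profile: (raw - dark) / (flat - dark)."""
    denom = np.maximum(flat - dark, eps)  # guard against division by zero
    return (raw - dark) / denom


def subtract_background(frame, background):
    """Remove a static reference frame so only changing features remain."""
    return frame - background


def crop(frame, top, left, height, width):
    """Cut out a rectangular region of interest."""
    return frame[top:top + height, left:left + width]


def preprocess(raw, flat, dark, background, roi):
    """Apply flat field correction, background subtraction and cropping."""
    corrected = flat_field_correct(raw, flat, dark)
    foreground = subtract_background(corrected, background)
    return crop(foreground, *roi)


# Synthetic example: uniform 80% transmission with one darker spot.
dark = np.full((6, 8), 10.0)
flat = dark + np.linspace(50.0, 150.0, 48).reshape(6, 8)  # uneven beam
transmission = np.full((6, 8), 0.8)
transmission[2, 3] = 0.5  # a feature absorbing more X-rays
raw = dark + transmission * (flat - dark)
background = np.full((6, 8), 0.8)  # assumed feature-free reference

patch = preprocess(raw, flat, dark, background, roi=(1, 2, 3, 3))
```

In this synthetic case the uneven beam profile cancels out, so the cropped patch is zero everywhere except at the absorbing spot, which is the kind of clean input a segmentation model benefits from.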

Video 1: Automatic semantic segmentation of time-series ESRF X-ray images. (a) Raw X-ray images; (b) ground-truth pixel-labelling results; (c) semantic segmentation results from trained AM-SegNet.

Video 2: Automatic semantic segmentation of time-series high-pressure die-casting X-ray images. (a) Raw X-ray images; (b) ground-truth pixel-labelling results; (c) semantic segmentation results from trained AM-SegNet.

In summary, AM-SegNet allows for faster and more accurate processing of X-ray imaging data, granting researchers greater insight into the AM process. Beyond AM, the automatic analysis achieved through this model has potential applications in many other advanced manufacturing processes. Finally, the broad scope of data used to train AM-SegNet creates a benchmark database that other researchers can use to compare their own models against. This development anticipates a near future in which images from high-speed synchrotron experiments are segmented and quantified in real time through deep learning.

The source code of AM-SegNet is publicly available on GitHub (https://github.com/UCL-MSMaH/AM-SegNet).

Principal publication and authors

AM-SegNet for additive manufacturing in-situ X-ray image segmentation and feature quantification, W. Li (a,b), R. Lambert-Garcia (a,b), A.C.M. Getley (a,b), K. Kim (a,b), S. Bhagavath (a,b), M. Majkut (c), A. Rack (c), P.D. Lee (a,b), C.L.A. Leung (a,b), Virtual Phys. Prototyp. 19(1), e2325572 (2024); https://doi.org/10.1080/17452759.2024.2325572

(a) Department of Mechanical Engineering, University College London, London (UK)

(b) Rutherford Appleton Laboratory, Research Complex at Harwell, Didcot (UK)

(c) ESRF