Disk based data storage at the ESRF – Striking the ideal balance between performance and functionality

In 1994, the Networked Interactive Computing Environment (NICE) was set up for the experiments at the beamlines, which routinely store and process large volumes of data for tasks ranging from theoretical simulations to sophisticated image processing. Visitors arriving at the ESRF are given a NICE account, and their experimental data is stored in a large shared area offering 1 PetaByte of disk space, where it is kept for fifty days after the end of the experiment. ESRF staff scientists use other dedicated areas offering a further 500 TeraBytes of disk space. Visitors’ raw and processed data can be exported to the home laboratory either via the Internet or on removable storage devices such as USB disks.

|

|

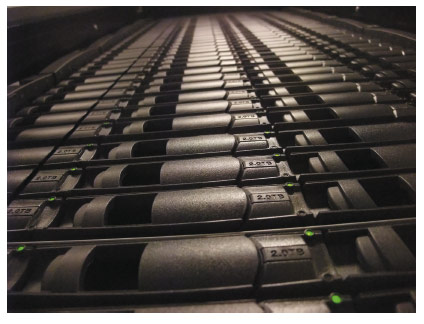

Disk array. |

The ESRF is equipped with two state-of-the-art data centres, allowing the physical separation of disk-based storage and tape-based backups. In addition to disk storage, NICE has incremental tape backups, made every night as a protection against accidental deletion of data. A full backup is made before data is removed from the disks at the end of the fifty-day period following the experiment, and it is kept for another six months. Experimental data is processed using high-performance computers, the so-called compute clusters. A data communication network provides multiple high-speed links between the data producers, the central disk storage, the compute clusters, and the data analysis workstations. For the last few years, individual computers have increasingly been connected over 10 Gbit/second fibre-optic links.
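The retention schedule described above can be illustrated with a short date calculation. This is only a sketch: the function names and the example end date are hypothetical, and it assumes that the six months of tape retention are counted in calendar months from the day the data is removed from disk.

```python
import calendar
from datetime import date, timedelta

DISK_RETENTION_DAYS = 50    # fifty days on disk after the experiment (from the text)
TAPE_RETENTION_MONTHS = 6   # full backup kept six more months (from the text)


def add_months(day, months):
    """Add calendar months to a date, clamping to the last day of the month."""
    month_index = day.month - 1 + months
    year = day.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(day.day, last_day))


def retention_dates(experiment_end):
    """Return (disk removal date, tape expiry date) for an experiment end date.

    Assumption: the tape retention period starts at the disk removal date.
    """
    disk_removal = experiment_end + timedelta(days=DISK_RETENTION_DAYS)
    tape_expiry = add_months(disk_removal, TAPE_RETENTION_MONTHS)
    return disk_removal, tape_expiry


# Hypothetical experiment ending on 1 March 2012.
disk_removal, tape_expiry = retention_dates(date(2012, 3, 1))
print("Removed from disk:", disk_removal)
print("Tape copy expires:", tape_expiry)
```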

The main cloud on the horizon is the central disk storage, which has recently become a frequent bottleneck in this topology. The many powerful computers in the compute clusters drain disk bandwidth during data analysis and can therefore disrupt the writing of data from the beamlines. Overcoming this hurdle requires a clear view of all the constraints inherent to a central disk storage facility: the ever-increasing quantity of large detector images, the need for fast simultaneous access from multiple data producers, ease of operation, compatibility with many different client operating systems, and extreme resilience to guarantee non-stop 24/7 operation. In 2011, after an unsuccessful attempt to find a 1 PetaByte high-performance system, the ESRF had to lower its sights and adopted a somewhat slower but highly reliable solution of 500 TeraBytes. The aim was compatibility with in-house resources and a level of reliability high enough to minimise the risk of a major failure, which could have severe consequences for operation (in the worst case, up to several days of downtime before normal operation is restored). This storage system is based on the NFS protocol, which guarantees compatibility with the large variety of client operating systems at the ESRF.

This strategic choice allows individual data streams of up to 500 MBytes/sec and an aggregated performance of 2 GBytes/sec. During the first run of 2012, a massive 350 TB of data were produced in less than three months. Single experiments in excess of 10 TB are now commonplace. Positive feedback from users on the ease of use and the flexibility of the shared space has encouraged us to increase its capacity. A further 500 TB were added at the end of 2012.
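To put these figures in perspective, the following back-of-the-envelope sketch converts them into an average data rate and into transfer times. It assumes decimal units (1 TB = 10^12 bytes) and takes "less than three months" as 90 days, so the average rate it prints is a lower bound; the function names are illustrative only.

```python
# Back-of-the-envelope figures. Decimal units and a 90-day run are assumptions.
MB = 10**6
GB = 10**9
TB = 10**12
SECONDS_PER_DAY = 86_400


def average_rate_mb_per_s(volume_bytes, days):
    """Average sustained rate in MBytes/sec needed to write a volume in `days`."""
    return volume_bytes / (days * SECONDS_PER_DAY) / MB


def transfer_hours(volume_bytes, rate_bytes_per_s):
    """Hours needed to move a volume at a constant rate."""
    return volume_bytes / rate_bytes_per_s / 3600


# 350 TB produced during the first run of 2012 (figure from the text).
run_average = average_rate_mb_per_s(350 * TB, 90)

# A single 10 TB experiment at the single-stream and aggregated rates.
single_stream_hours = transfer_hours(10 * TB, 500 * MB)
aggregate_hours = transfer_hours(10 * TB, 2 * GB)

print(f"Average rate over the run: {run_average:.1f} MBytes/sec")
print(f"10 TB at 500 MBytes/sec:   {single_stream_hours:.1f} hours")
print(f"10 TB at 2 GBytes/sec:     {aggregate_hours:.1f} hours")
```

The average over a whole run is far below the peak rates quoted, which is consistent with a system that has to be sized for bursts of acquisition rather than for the mean load.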

As part of the ESFRI (European Strategy Forum on Research Infrastructures) roadmap, the ESRF is involved in the CRISP project [1]. The ESRF’s participation in this project includes work on high-speed data transfers based on a local buffer storage system inserted between the detector and the central disk systems to absorb peaks in data acquisition. It is expected that this will offload the central disk system while providing guaranteed performance levels in the GByte/sec range.
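The idea of a buffer that absorbs acquisition peaks can be illustrated with a toy simulation. This is not the CRISP design: the function, the one-second time step, the burst pattern and all the rates and capacities below are hypothetical values chosen only to show the principle.

```python
def simulate_buffer(inflow_gb_per_s, drain_gb_per_s, capacity_gb):
    """Simulate a buffer between a detector and central storage.

    `inflow_gb_per_s` holds one detector rate per one-second step. The buffer
    forwards data to central storage at no more than `drain_gb_per_s`.
    Returns (peak fill in GB, data in GB that did not fit in the buffer).
    """
    fill = 0.0
    peak = 0.0
    overflow = 0.0
    for inflow in inflow_gb_per_s:
        fill += inflow                     # detector writes into the buffer
        fill -= min(fill, drain_gb_per_s)  # buffer drains at a bounded rate
        if fill > capacity_gb:             # anything beyond capacity is lost
            overflow += fill - capacity_gb
            fill = capacity_gb
        peak = max(peak, fill)
    return peak, overflow


# Hypothetical trace: a 10 s burst at 2 GB/s followed by 30 s of idle time.
trace = [2.0] * 10 + [0.0] * 30
peak, lost = simulate_buffer(trace, drain_gb_per_s=0.5, capacity_gb=20.0)
print(f"Peak buffer fill: {peak} GB, data lost: {lost} GB")
```

In this toy case the central storage never sees more than 0.5 GB/s even though the detector bursts at 2 GB/s, at the price of a buffer large enough to hold the backlog.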

In parallel with this upgrade, it is necessary to replace the older central disk systems used for ESRF in-house research and general purpose storage. Given that only three beamlines occupy most of the space, it was decided to evaluate another tactic and set up small dedicated private storage servers (40-50 TB capacity per server) for these demanding beamlines. In addition to cost considerations, this solution affords several advantages, notably:

- high flexibility in terms of disk space: when more space is required, additional storage servers can simply be added

- dedicated bandwidth to access storage resources for the beamlines

- higher aggregated performance: every private storage server adds its own high speed link to the overall available bandwidth

However, this scenario has some potential stumbling blocks: private storage servers are less reliable and, in case of failure, a manual operation is needed to store data on a standby system. Beamlines will have to manage their own disk space and be vigilant to ensure that a “disk full” status is not reached, as this might stop ongoing experiments or interrupt data analysis. Also, daily incremental backup to tape will no longer be technically feasible.
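As an illustration of the kind of vigilance this requires, a beamline could run a small periodic check on its storage. The sketch below uses Python's standard `shutil.disk_usage`; the function names, the 85% warning threshold and the path are assumptions made for the example, not ESRF practice.

```python
import shutil


def usage_fraction(path):
    """Return the used fraction (0.0 to 1.0) of the filesystem holding `path`."""
    usage = shutil.disk_usage(path)
    return usage.used / usage.total


def check_disk(path, warn_at=0.85):
    """Return (needs_attention, used_fraction) for the given path.

    `warn_at` is a hypothetical threshold; choose it so that there is time
    to free space before an acquisition fills the disk.
    """
    fraction = usage_fraction(path)
    return fraction >= warn_at, fraction


# "." stands in for the mount point of a private storage server.
needs_attention, fraction = check_disk(".")
status = "WARNING" if needs_attention else "ok"
print(f"{status}: {fraction:.0%} of the disk is in use")
```

Run from a scheduler every few minutes, a check like this gives warning before a "disk full" condition interrupts an experiment.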

The three most demanding beamlines in terms of data storage (ID19, ID15 and ID22) have recognised the potential of this approach and agreed to adopt it. Tests will start on ID19, which will be equipped with two private storage servers at the beginning of 2013. Should the tests prove conclusive, a number of such dedicated servers will be rolled out in the course of 2013 to replace the ageing central disk systems holding the in-house research data.

To conclude, the ESRF has taken a prudent approach by opting for solutions that combine reliability, flexibility and performance to satisfy increasingly challenging storage demands. Keeping abreast of market trends through a constant exploration and analysis of available solutions should enable the ESRF to secure future storage requirements, to the mutual satisfaction of scientists and the IT engineers who guarantee the smooth running of the system.

|

|

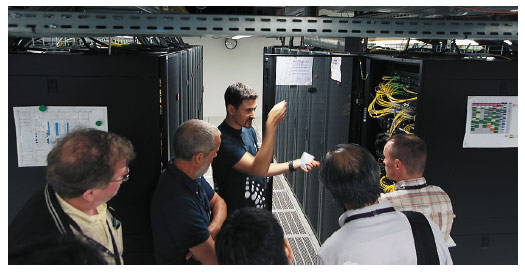

Visitors enjoying a guided tour of the data centres during SRI 2012. |

Authors

B. Lebayle, D. Gervaise, C. Mary and R. Dimper

ESRF

References

[1] CRISP: http://www.crisp-fp7.eu/about-crisp/esfri/